Bringing Data Together

As mentioned in a previous post, I am in the process of updating the mathematical model used by my school to determine when students are ready to take college-level courses. This model is important to us because we send over a third of our juniors and half of our seniors to college each year and we don’t want to mistakenly send students to college before they are ready. Using this model, my team has gotten pretty good at determining readiness; last year our students passed 97% of the college courses they attempted.

Before the model can be applied, the data it relies on must first be brought together into a single database or spreadsheet. Depending on your systems, this can be a quick or time-consuming endeavor. For me, bringing together all of the data we have on students took a little over six hours. Here’s what I did:

Google Sheets

Because it is shareable and applies edits in real time, I do all of my modeling in a single Google Sheet. For anyone who is an Excel devotee, this may sound crazy. It is. But, for me, the benefits outweigh the costs.

For this year’s update, I created a new Google Sheet called “Master Data File” where I pasted an export from our Student Information System (SIS) containing each student’s name, ID, DOB, sex, graduation year, and cumulative GPA. Because our SIS contains the most up-to-date information regarding student enrollments, I always start there and then use that data as a reference for gathering the rest. No need to gather data on a student who is no longer enrolled.

Microsoft Excel

So far, there is only one function I need that is not easily done in Google Sheets: consolidating data. At one time, I would spend hours manually inputting data from one system’s export file to another. Excel can consolidate data from two spreadsheets in minutes.

The Consolidate function is in the "Data" ribbon in Microsoft Excel.

For example, data downloaded from the College Board website looks different than data taken from our SIS. The College Board data includes some students who have left my school, is missing data for students who are newly enrolled, and may have other formatting differences that would make a simple copy/paste impossible to do.

As long as I have a single column that uniquely identifies each individual student (student ID, “Last Name, First Name” combinations, etc.), Excel can consolidate the data from both sources into a single row to be included in the master file.
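For readers who would rather script this step than use Excel, here is a minimal Python sketch of the same idea: a join on one shared key column, with the SIS roster deciding which students are kept. This is not what Excel's Consolidate does internally, only an illustration of matching rows by a unique identifier. The function name `consolidate`, the column names (`student_id`, `gpa`, `sat_total`), and all sample values are made up for the example.

```python
def consolidate(roster_rows, source_rows, key="student_id"):
    """Left-join source_rows onto roster_rows using a shared key column.

    Every roster row is kept; source rows with no roster match are dropped.
    """
    # Index the second source by key (a later duplicate key overwrites an earlier one).
    lookup = {row[key]: row for row in source_rows}

    # Collect the extra columns the second source contributes, in first-seen order.
    extra_columns = []
    for row in source_rows:
        for column in row:
            if column != key and column not in extra_columns:
                extra_columns.append(column)

    merged = []
    for row in roster_rows:
        combined = dict(row)
        match = lookup.get(row[key], {})
        for column in extra_columns:
            # Leave the cell blank when the student has no row in the second source.
            combined[column] = match.get(column, "")
        merged.append(combined)
    return merged


# Made-up sample rows, for illustration only.
sis_export = [
    {"student_id": "1001", "name": "Doe, Jane", "gpa": "3.6"},
    {"student_id": "1002", "name": "Roe, Sam", "gpa": "3.1"},  # newly enrolled
]
college_board_export = [
    {"student_id": "1001", "sat_total": "1180"},
    {"student_id": "0999", "sat_total": "1010"},  # has left the school
]

master = consolidate(sis_export, college_board_export)
```

In this sketch the student who left the school (ID 0999) is dropped, and the newly enrolled student (ID 1002) keeps a row with a blank score, which mirrors the two mismatches described above.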

Data Brought Together

Here’s the data I consolidated into the single Google Sheet for each student organized by source:

Student Information System

- Demographic Information: used for sorting and aggregated data analysis.

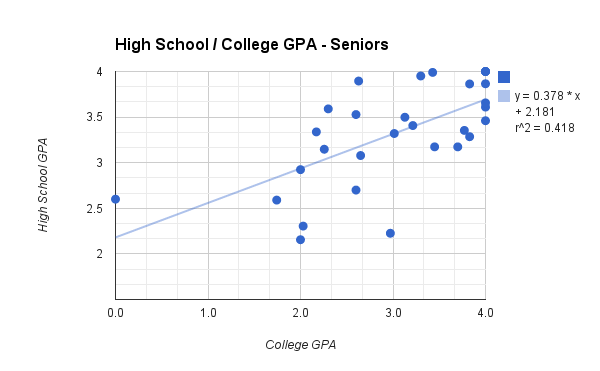

- High School Grade Point Average: used as a primary indicator of future college success. This topic will be expanded upon further in a later post.

College Board

- PSAT 8/9, 10, and 11: We give the PSAT to all students every year in grades 8 through 11. While we do not yet use this data in our model, I decided to pull it in hopes of future analysis and reporting.

- SAT: In Michigan, all 11th graders are required to take the new SAT. Our community college partner accepts SAT scores for determining college course placement, so we use these scores as part of our readiness model.

- Accuplacer: While this is technically a College Board product, we get this data from our college partner. Beginning in the 9th grade, our students take this college placement assessment each year until they place into college-level coursework.

ACT

- ACT: Now that the state of Michigan has moved from the ACT to the SAT for its college readiness assessment, we only have a few students each year who take this assessment. For those who do, though, I need to consider their scores when determining readiness.

- Compass: Until this year, our college partner used the ACT’s Compass assessment for determining college placement. This assessment was replaced by Accuplacer but we still consider Compass data in determining students’ college readiness.

Other

- Agency Score: Each year, we ask our teachers to rate each student’s skill at exercising agency on a scale of 0-5. Agency, for those not familiar with the concept, is one’s ability to be an “agent” of his or her own learning. It consists of two components, both a part of our instructional model: 1.) ability to complete tasks to specification and on time, and 2.) growing from challenging work and setbacks. I simply ask teachers to rate each student and take the average of their input. More on this measure of college readiness later.
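The averaging step for the Agency Score could be sketched in Python as below. The function name `agency_score`, the handling of missing ratings (skipped rather than counted as zero), and the rounding to two decimals are all assumptions made for the example, and the ratings are invented.

```python
from statistics import mean


def agency_score(ratings):
    """Average one student's teacher ratings on the 0-5 agency scale.

    None stands for a teacher who did not submit a rating; it is skipped.
    Returns None when no teacher rated the student.
    """
    valid = [r for r in ratings if r is not None]
    for r in valid:
        if not 0 <= r <= 5:
            raise ValueError(f"rating {r} is outside the 0-5 scale")
    if not valid:
        return None
    return round(mean(valid), 2)


# Invented ratings from four teachers, one of whom did not respond.
example_score = agency_score([4, 3, 5, None])
```

Skipping missing ratings keeps a non-response from dragging a student's average toward zero; whether that is the right choice depends on how ratings are collected.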

When recording assessment data, I like to separate it by the year in which each student took the assessment, so I know what each student’s score was each year they took it. This allows me to see growth or stagnation in student performance, and makes analysis and reporting of data much easier to do.
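One way to picture this layout is one row per student with a separate column for each assessment and grade level. The Python sketch below reshapes a list of score records into that form. The function name `to_wide`, the column naming pattern (`PSAT_grade9`), and the scores are hypothetical and not taken from my actual sheet.

```python
def to_wide(records):
    """Turn (student_id, assessment, grade, score) records into one row per student.

    Each score lands in a column named "<assessment>_grade<grade>".
    """
    wide = {}
    for student_id, assessment, grade, score in records:
        row = wide.setdefault(student_id, {"student_id": student_id})
        row[f"{assessment}_grade{grade}"] = score
    return list(wide.values())


# Invented scores, for illustration only.
records = [
    ("1001", "PSAT", 9, 880),
    ("1001", "PSAT", 10, 950),
    ("1002", "PSAT", 9, 910),
]

wide_rows = to_wide(records)

# Growth for one student is then a simple difference between two columns.
growth_1001 = wide_rows[0]["PSAT_grade10"] - wide_rows[0]["PSAT_grade9"]
```

With scores side by side in one row, growth or stagnation becomes a subtraction between neighboring columns rather than a search across several export files.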

Next up: what I do with this data once I have it all in one location.